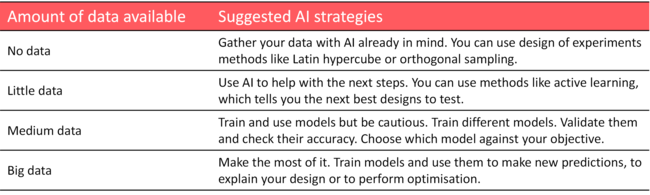

When it comes to adopting Artificial Intelligence (AI) for engineering applications, one question that is always asked is “how much data do I need?” That is a fair question, as it might feel risky to try something new and you might want to minimise the risks of failure.

Although the value you will get depends on the amount of data you have, the answer is that AI and data analysis can help your product development process at any stage, from having little or no data to having plenty.

No data

You might happen to be very new in the business and (nearly) no data is available to build Machine Learning (ML) models. This means that might take longer for you before you get all the value AI can offer. On the other hand, there is also good news: eventually, you will produce data by creating designs, running simulations, and performing tests.

As you start from scratch you can plan your data creation to match the needs of ML and AI. You can sample your data points so that it covers your entire design space. Grid sampling, Latin hypercube or orthogonal sampling are well-established methods to sample your design space. This way you are starting to generate a data base which has the needs of ML in mind from the beginning.

Little data

You might – like a lot of engineering companies – have a little amount of data, maybe because acquiring this data is too costly (expensive simulations, physical tests on a prototype, etc.). This might be historical data, or tests that you must do like those required for regulation purposes.

In that case, companies often want to reach an optimised design with a minimum number of additional tests or simulations. The truth is that even with small amount of data, AI can be extremely valuable. Methods like Bayesian optimisation and various clever acquisition functions (expected improvement, lower confidence bound, etc.) can tell you what are the most valuable next tests or simulations you should be doing to understand and optimise your product faster.

Medium data

You might have a fair amount of data (maybe a few hundred points), and you are wondering if that is enough data to train a good model. Unfortunately, there is no magic threshold number above which you are guaranteed a model with good accuracy.

Although the amount data is one of the most impactful leverage to increase accuracy, it will also depend on other things like how non-linear the problem is, how many dimensions (input parameters) there are, etc. The way to find out is to just train models and assess their accuracy on a separated test set. You might find out for example that for your problem, a random forest, or a gaussian process, might be better than a neural network.

Big data

The longer you are in the business and the more design iterations you have already done, the bigger your database becomes. You might look upon decades of engineering experience – besides having designed and produced great products for your customers you have piled up a large amount of data.

That is a treasure of unimaginable wealth. Not making use of it is like hiding your money under your pillow. You should start and make use of your data wealth. Learn from it, build strong ML models, do optimisations and improve your way of engineering. And if the data was generated without ML in mind make sure to explore it carefully as your design space sampling may not be optimal.

Conclusion

Finally, the question is not really “How much data do I need to use AI?”, but rather “What AI strategy will I follow given the data I currently have?”. And whatever strategy is suitable for your situation, Monolith AI offers a wealth of functions to assist you in every stage of your AI journey.