Engineering is a highly creative discipline. Every iteration of simulations or tests gives engineers new insight into how best to refine their design, based on complex goals and constraints. Finding an optimum solution means being creative about what designs to evaluate and how to evaluate them.

The AI Revolution: From AI image recognition technology to vast engineering applications

When trying to build an understanding of how a non-linear and multi-variable physical system works, all engineering efforts (simulations or physical tests) are journeys to learn functional relationships by analysing data.

This data is governed by immutable physical laws and relationships. Unlike financial data, for example, data generated by engineers reflects an underlying truth: that of physics, as first described by Newton, Bernoulli, Fourier or Laplace.

The fact that this data is abundant (generated over numerous design iterations) and based on fundamental scientific principles means that it can often be an excellent subject for a Machine Learning study.

Machine learning applications

Such a study can serve two purposes: predicting the behaviour of a new design under new testing conditions, or optimising the design and conditions for a target behaviour.

Researching this possibility has been our focus for the last few years, and we have today built numerous AI tools, using new and traditional machine learning algorithms, capable of considerably accelerating engineering design cycles.

In particular, our main focus has been to develop deep learning models to learn from 3D data (CAD designs and simulations). The early adopters of our technology have found it to be a breakthrough.

We strongly believe that the methods outlined in this article will revolutionise Engineering in much the same way that AI for facial recognition has revolutionised public security, biometric hardware authentication, and synthetic media (deepfake technology).

Until now, engineering companies have undervalued the need to build databases from the results of simulations or physical tests, because of the variety of applications and the iterative, creative nature of an engineer’s day-to-day work.

Engineering information, and most notably 3D designs and simulations, is rarely stored as structured data. With traditional data analysis tools, this makes drawing direct quantitative comparisons between data points a major challenge.

Thankfully, the Engineering community is quickly realising the importance of Digitalisation. In recent years, the need to capture, structure, and analyse Engineering data has become more and more apparent. Learning from past achievements and experience to help develop a next-generation product has traditionally been predominantly a qualitative exercise.

This is particularly true for 3D data which can contain non-parametric elements of aesthetics/ergonomics and can therefore be difficult to structure for a data analysis exercise.

The aim of our research is to adopt and adapt algorithms from other areas of deep learning such as image processing to create expert AI systems that can learn from 3D Engineering data, for design validation and performance-based optimisation.

AI image recognition technology & image recognition applications

For a clearer understanding of how this works, let’s draw a direct comparison with image recognition and facial recognition technology.

Using deep learning algorithms such as convolutional neural networks (CNNs), it has become easy for companies such as Facebook or Apple to recognise which of your friends appears in your latest profile picture, or to unlock your iPhone with your face.

These image recognition algorithms can automatically identify objects, and have gathered learning from millions of images (or point clouds), from which they were able to identify the distinct features from a target image which characterise a human face.

In a deep neural network, these ‘distinct features’ take the form of a structured set of numerical parameters. When presented with a new image, the network can reduce it to these parameters and use them to estimate the face’s gender, age, ethnicity, expression, etc.

Beyond simply recognising a human face, deep learning algorithms such as Generative Adversarial Networks (GANs) are also capable of generating new, synthetic digital images of human faces, known as deepfakes.

By forming new combinations of the numerical parameters that represent the distinct physical attributes of a human face, highly realistic images (including unique facial expressions) can be generated.

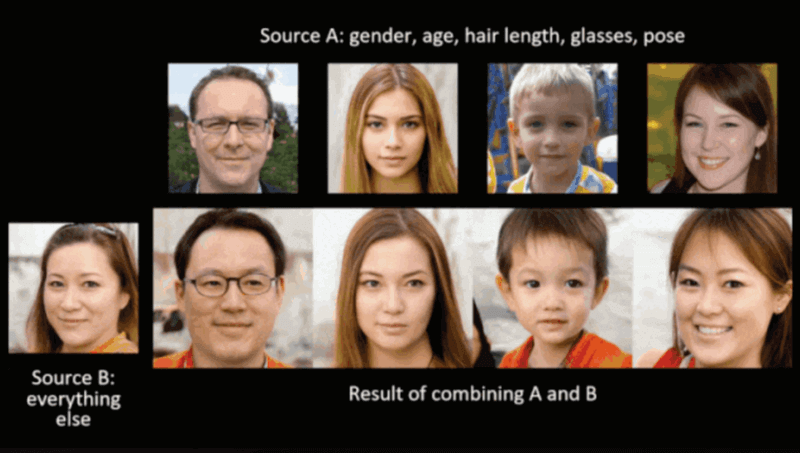

It is, for example, possible to generate a ‘hybrid’ of two faces or change a male face to a female face using AI facial recognition data (see Figure 1).

Figure 1: Open-Source Face Generator 'StyleGAN', by NVIDIA
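To make the idea of a ‘hybrid’ concrete, here is a minimal sketch of blending two sets of numerical parameters by linear interpolation. The latent vectors and the `blend_latents` helper are hypothetical and purely illustrative; a real generator such as StyleGAN would take the blended vector as input and render an image from it, a step not shown here.

```python
import numpy as np

def blend_latents(z_a, z_b, t):
    """Linearly interpolate between two latent vectors.

    t = 0 returns z_a, t = 1 returns z_b, t = 0.5 is an even hybrid.
    """
    z_a = np.asarray(z_a, dtype=float)
    z_b = np.asarray(z_b, dtype=float)
    return (1.0 - t) * z_a + t * z_b

# Hypothetical 4-dimensional latent codes for two faces (made-up values).
z_face_a = np.array([0.0, 1.0, -2.0, 4.0])
z_face_b = np.array([2.0, 3.0, 0.0, 0.0])

z_hybrid = blend_latents(z_face_a, z_face_b, 0.5)
print(z_hybrid)  # midpoint of the two codes: [1, 2, -1, 2]
```

Sweeping `t` from 0 to 1 would give a gradual transition from one face to the other, assuming the generator's latent space is smooth enough for intermediate points to remain realistic.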

The same AI image recognition concept can be applied to engineering data, with 3D CAD files as the data to synthesise and performance/quality metrics as the attributes to recognise (design validation) or optimise against (generative design).

Developing these methods for 3D Engineering data brings added challenges, chief among them the need to structure individual CAD data files and associate them with the boundary conditions under which they were simulated.

Compared to image processing, working with CAD data also requires more computational resources per data point, meaning there needs to be a strong emphasis on computational efficiency when developing these algorithms.

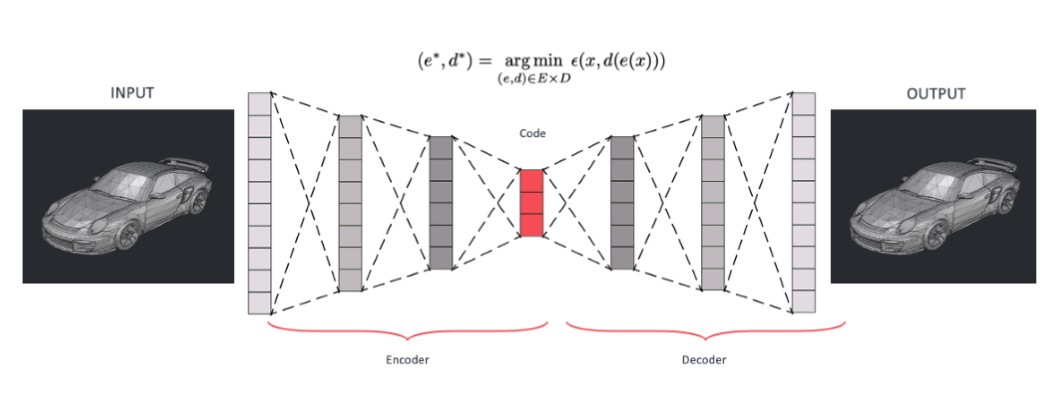

Figure 2 illustrates the algorithmic framework we use to apply this technology to Generative Design.

First, a neural network is trained as an Encoder model, which ‘compresses’ the 3D data of the cars into a structured set of numerical latent parameters. We like to call this the ‘3D DNA’ of the geometry.

Then a second neural network, the Decoder model, uses these parameters to ‘regenerate’ a 3D car. The fascinating thing is that, just as with the human faces above, it can create new combinations of the cars it has seen, which makes it seem creative.

In the context of Generative Design, the Encoder model can be used for design validation: making a performance prediction for the outcome of a new test or simulation, and the Decoder can be used for design optimisation: generating new 3D geometries which satisfy target performance attributes.

Figure 2: Overview of an Autoencoder's deep learning network structure.
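The encode/decode round trip can be sketched in a few lines. The example below is a deliberately simplified stand-in, not the deep network described in this article: it assumes each 3D design has already been flattened into a fixed-length vector of coordinates, uses synthetic data, and replaces the neural Encoder and Decoder with the best linear pair (found via SVD, which is equivalent to PCA). All names and sizes are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-in for a dataset of 3D shapes: 200 "designs", each flattened
# to 30 coordinates, generated from 3 hidden factors plus a little noise.
n_designs, n_coords, n_latent = 200, 30, 3
hidden = rng.normal(size=(n_designs, n_latent))
mixing = rng.normal(size=(n_latent, n_coords))
shapes = hidden @ mixing + 0.01 * rng.normal(size=(n_designs, n_coords))

# "Training": fit the best linear encoder/decoder pair via SVD.
mean_shape = shapes.mean(axis=0)
_, _, vt = np.linalg.svd(shapes - mean_shape, full_matrices=False)
components = vt[:n_latent]  # shape (n_latent, n_coords)

def encode(x):
    """Compress flattened geometry into n_latent numbers (the '3D DNA')."""
    return (x - mean_shape) @ components.T

def decode(z):
    """Regenerate flattened geometry from latent parameters."""
    return z @ components + mean_shape

z = encode(shapes)
reconstruction = decode(z)
rel_error = np.linalg.norm(reconstruction - shapes) / np.linalg.norm(shapes)
print(z.shape, f"relative reconstruction error: {rel_error:.4f}")
```

A deep autoencoder follows the same pattern but learns non-linear `encode` and `decode` functions, which is what allows it to capture complex 3D geometry that a linear projection cannot.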

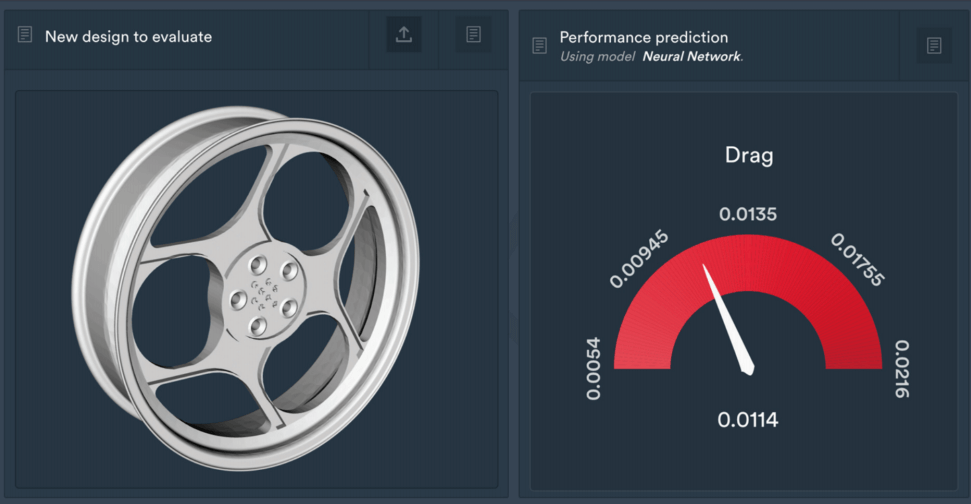

As an example of design validation using this technology, Figure 3 shows a prediction for the contribution to a vehicle’s drag coefficient from a wheel design.

The deep learning Encoder can scan the 3D data, identify its geometric DNA and make an instant prediction for a particular object based on a dataset of historic wind tunnel tests.

Figure 3: A prediction for Drag is made on an encoded 3D design of a wheel, using a neural network trained on historic wind tunnel data.
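As a rough illustration of how a performance prediction can be attached to encoded designs, the sketch below fits a simple ridge regression from latent parameters to a drag value. Everything here is an assumption made for illustration: the latent codes and drag figures are synthetic, and a linear model stands in for the neural network trained on wind tunnel data that the article describes.

```python
import numpy as np

rng = np.random.default_rng(1)

# Synthetic stand-ins: latent codes of 300 wheel designs and their "measured"
# drag contributions. Real inputs would come from an encoder and test records.
n_designs, n_latent = 300, 4
latents = rng.normal(size=(n_designs, n_latent))
true_weights = np.array([0.02, -0.01, 0.005, 0.0])  # made-up ground truth
drag = 0.30 + latents @ true_weights + 0.001 * rng.normal(size=n_designs)

def fit_ridge(z, y, alpha=1e-3):
    """Closed-form ridge regression of y on [1, z].

    For simplicity the intercept is regularised along with the weights.
    """
    features = np.hstack([np.ones((len(z), 1)), z])
    gram = features.T @ features + alpha * np.eye(features.shape[1])
    return np.linalg.solve(gram, features.T @ y)

def predict(weights, z):
    """Predict drag for one latent code or a batch of them."""
    z = np.atleast_2d(z)
    return weights[0] + z @ weights[1:]

# Hold out the last 50 designs to check the prediction on unseen geometry.
train, test = slice(0, 250), slice(250, None)
weights = fit_ridge(latents[train], drag[train])
errors = predict(weights, latents[test]) - drag[test]
print(f"mean absolute error on held-out designs: {np.abs(errors).mean():.4f}")
```

Holding out some designs, as done here, is the usual way to check that a prediction generalises to geometry the model has not seen.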

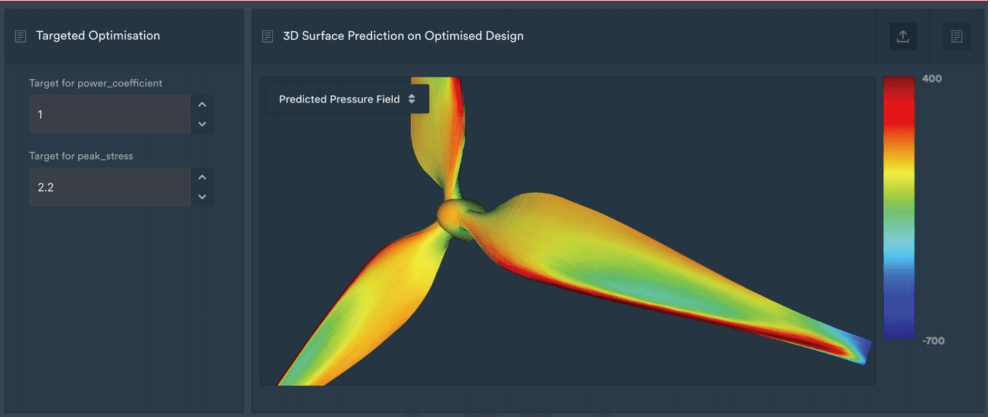

Figure 4: An optimum 3D geometry of a wind turbine is generated according to custom performance attributes. The surface pressure field is also predicted.

As an example of design optimisation with deep learning, Figure 4 shows a performance-optimised 3D CAD model of a wind turbine that has been generated entirely by artificial intelligence.

In this case, the pressure field on the surface of the geometry can also be predicted for this new design, as it was part of the historical dataset of simulations used to form this neural network.
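One generic way to search for such an optimum, shown here only as a sketch, is to run gradient descent on the latent parameters against a performance predictor and then pass the result to the Decoder. The article does not state which optimiser is actually used, so this is an assumption. The quadratic `predicted_penalty` function and its target values are hypothetical placeholders for a trained predictor, and the decoding step is omitted.

```python
import numpy as np

# Hypothetical surrogate: a predicted performance penalty as a function of the
# latent code. A trained network would go here; a quadratic bowl is assumed.
z_target = np.array([0.5, -1.0, 2.0])  # made-up optimum, for illustration

def predicted_penalty(z):
    return float(np.sum((z - z_target) ** 2))

def numerical_gradient(f, z, eps=1e-6):
    """Central finite differences, so any black-box predictor can be plugged in."""
    grad = np.zeros_like(z)
    for i in range(z.size):
        step = np.zeros_like(z)
        step[i] = eps
        grad[i] = (f(z + step) - f(z - step)) / (2 * eps)
    return grad

def optimise_latent(f, z_start, learning_rate=0.1, n_steps=200):
    """Plain gradient descent on the latent parameters."""
    z = np.array(z_start, dtype=float)
    for _ in range(n_steps):
        z -= learning_rate * numerical_gradient(f, z)
    return z

z_opt = optimise_latent(predicted_penalty, z_start=np.zeros(3))
print(z_opt, predicted_penalty(z_opt))
```

With a real predictor the penalty surface is rarely this well behaved, so several starting points or a more robust optimiser would likely be needed.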

This new method of evaluating and optimising 3D designs differs from conventional generative design algorithms in two ways: it is not based on PDE solvers and it has no hard-coded functions. Instead, it learns purely from human inspiration, in the form of the designs and results that engineers have already produced.

Engineers have spent decades developing CAE simulation technology which allows them to make highly accurate virtual assessments of the quality of their designs.

Our new deep learning methods do not replace these tools. On the contrary, the AI algorithms will only be as good as the data they learn from.

In other words, the engineer’s expert intuitions and the quality of the simulation tools they use both contribute to enriching the quality of these Generative Design algorithms and the accuracy of their predictions. This is why we talk about ‘Human-Inspired Generative Design’.

This also means these algorithms are compatible with any simulation software and are applicable to virtually any engineering problem for which there exists a structured dataset of historic tests (or an automated process to generate simulation data in batch), including Computational Fluid Dynamics (CFD) applications.

As the artificial intelligence algorithms are ‘trained’ on existing engineering experience, they can learn simulation outcomes (such as aerodynamic forces) but also past judgment calls from engineering experts (such as visual manufacturability assessments).

Perhaps even more impactful are the new avenues that adopting these methods can open for entire R&D processes. Engineers need fewer testing iterations to converge on an optimum solution, and prototyping can be dramatically reduced.

We strongly believe that in the age of Digitalisation, with ever more accurate virtual prototyping tools and ever more complex engineering systems to design, a Siri- or Alexa-style AI Assistant for Engineers will become increasingly feasible and increasingly necessary.

Authors

Dr Richard Ahlfeld graduated summa cum laude with a double degree in Aerospace and Applied Mathematics from Delft University of Technology. He went on to complete a PhD in Machine Learning for Aerospace Engineering at Imperial College London during which he worked at NASA on the Mars rocket. Richard is the CEO and Founder of Monolith AI.

Marc Emmanuelli graduated summa cum laude from Imperial College London, having researched parametric design, simulation, and optimisation within the Aerial Robotics Lab. He worked as a Design Studio Engineer at Jaguar Land Rover, before joining Monolith AI in 2018 to help develop 3D functionality. Monolith AI’s software platform is currently being used by engineering companies such as BMW, Honda, L’Oréal and Siemens, for applications ranging from predicting the outcome of wind tunnel or track tests, to generating performance optimised 3D CAD designs with AI.