Many engineering companies have already adopted AI to minimise their R&D effort when validating or optimising designs. However, adopting AI doesn’t simply involve building and deploying machine learning models.

To take full advantage of an AI solution at scale, you need to fit it into your overall engineering workflow. Here are a few guidelines on recognising the signs that a more data-driven workflow will benefit you, evaluating a suitable 'AI fit', and updating your workflow management strategy accordingly.

Engineering workflow management: before the adoption of AI

Traditional engineering workflow management strategies tend to be inefficient for several reasons:

- Knowledge isn’t being retained. The results of simulations or tests carried out during development aren’t being captured, meaning very little knowledge is being retained for future generations of designs, developed by future generations of engineers.

- It can often feel like an iterative guessing game. How should you change your design to improve its performance? What is the relative importance of each design parameter? How do you find an optimum when considering multiple goals and strict constraints? These are questions that engineers often struggle to answer quantitatively.

- You often need to start from scratch. Your team has worked on refining a design for the last month around a narrow set of goals and constraints from other departments. What if these requirements suddenly change? It can mean going back to square one. You will still encounter this issue with the use of traditional design space exploration tools since the design requirements for optimisation campaigns need to be defined upfront.

- It involves a lot of manual effort. Because of the iterative nature of traditional engineering workflows, a lot of an engineer’s time is spent setting up repetitive simulations or empirical testing, analysing and preparing reports for one result at a time, and trying to align with other departments despite the uncertainty of how changes in the design will affect its quality or performance.

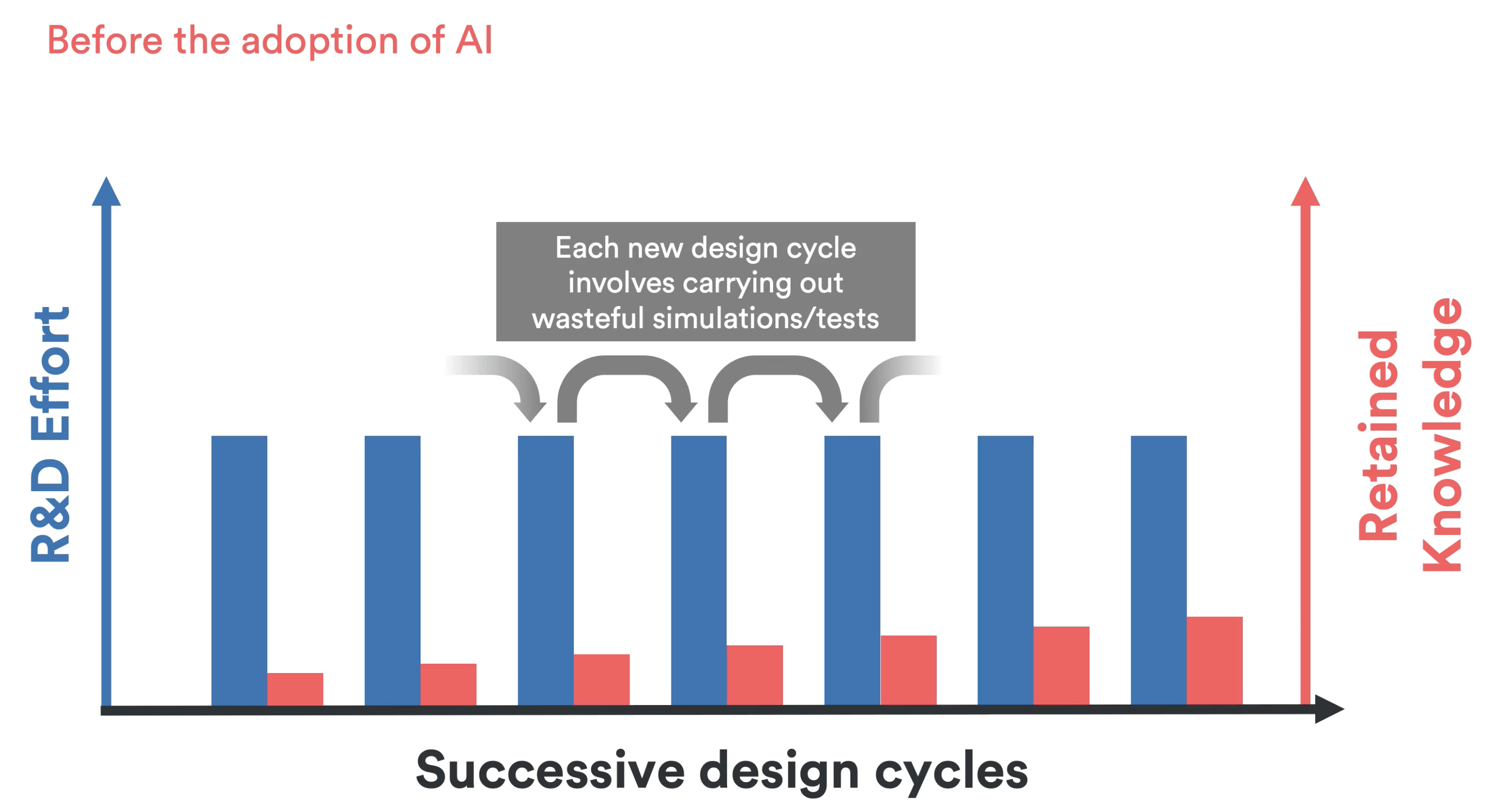

In a traditional engineering workflow management system, design cycles are slow and little R&D knowledge is retained from one cycle to the next.

What to aim for in engineering workflow management: data-driven R&D

Companies that have fully incorporated the benefits of AI into their engineering workflows can derive insight from data, which accelerates their product development processes. Here are a few characteristics of this end goal:

- It doesn’t replace how each test or simulation is carried out; instead, it reduces the total number needed in a design cycle. Engineering organisations have spent decades refining ways to assess the quality and performance of their designs. It is the quality of this data, describing complex physical behaviour, which enables machine learning models to be built.

- It allows you to instantly predict and optimise the performance of your designs. Using historical data, an AI model can learn the relationships between design variables and test/simulation results. This enables you to quickly build a global understanding of your physical engineering system. For a new design, you’ll be able to predict the simulation or test outcomes. For a new set of goals and constraints, you’ll instantly find optimal designs. No more iterative guessing game, and no more starting from scratch.

- Effective communication of design requirements, test/simulation results, and performance trade-offs is a key element of an efficient product development process. By building AI solutions collaboratively and deploying them to colleagues or customers, knowledge and insight can be shared instead of being retained in the minds of a handful of experts.
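
To make the "predict, then optimise" idea above concrete, here is a minimal sketch in Python. It is not Monolith's method: the surrogate is a simple inverse-distance-weighted nearest-neighbour average standing in for a trained machine learning model, and the design variables, the numbers in `HISTORY`, and the function names are all hypothetical.

```python
import math

# Hypothetical historical results (illustrative numbers, not real data):
# (wall_thickness_mm, rib_count) -> (mass_kg, max_stress_mpa)
HISTORY = [
    ((2.0, 2), (1.10, 310.0)),
    ((2.0, 4), (1.25, 270.0)),
    ((3.0, 2), (1.45, 240.0)),
    ((3.0, 4), (1.60, 205.0)),
    ((4.0, 2), (1.80, 190.0)),
    ((4.0, 4), (1.95, 160.0)),
]


def predict(design, history=HISTORY, k=3):
    """Estimate (mass, stress) as an inverse-distance-weighted average
    of the k nearest historical designs."""
    nearest = sorted((math.dist(design, x), y) for x, y in history)[:k]
    if nearest[0][0] == 0.0:  # design already tested: return its recorded result
        return nearest[0][1]
    weights = [1.0 / d for d, _ in nearest]
    total = sum(weights)
    return tuple(
        sum(w * y[i] for w, (_, y) in zip(weights, nearest)) / total
        for i in range(len(nearest[0][1]))
    )


def lightest_feasible(candidates, stress_limit_mpa):
    """Return the candidate with the lowest predicted mass that meets the stress limit."""
    feasible = [
        (mass, design)
        for design in candidates
        for mass, stress in [predict(design)]
        if stress <= stress_limit_mpa
    ]
    return min(feasible)[1] if feasible else None


# Candidate designs on a coarse grid: thickness 2.0 to 4.0 mm in 0.5 mm steps, 2 to 4 ribs.
CANDIDATES = [(t / 2, r) for t in range(4, 9) for r in (2, 3, 4)]

if __name__ == "__main__":
    print(predict((2.5, 3)))
    # Changing the requirement only changes the query, not the model.
    print(lightest_feasible(CANDIDATES, stress_limit_mpa=250.0))
    print(lightest_feasible(CANDIDATES, stress_limit_mpa=200.0))
```

The point of the sketch is the last two calls: when the stress limit changes, the same surrogate is queried again with the new constraint rather than restarting the design study. A real model would also need scaled inputs and validation against held-out tests.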

How to get there: initial investment in effective engineering workflow management

Adopting AI will reduce R&D effort over time and increase retained knowledge (in engineering workflow management and beyond).

No engineering company can adopt AI at scale overnight and reap benefits the next day.

Improving engineering workflow management with AI adoption and ML solutions requires some adjustments to your existing engineering processes and workflow, including setting up repeatable processes to generate and capture data from your simulations or tests.

This can represent a significant upfront investment.

However, our experience with customers has shown that after initially setting up and adopting these newly defined ways of working, overall R&D effort quickly reduces in subsequent design cycles.

Let’s describe this in more detail, for two different types of product development processes using differing engineering workflow management strategies.

Engineering workflow management: evolutionary designs

You might carry out comparable tests or simulations from one design cycle to another, on a family of products for which the overall design concept doesn’t change.

Examples include CAE validation of new wheels, compliance testing of aerosols for new chemicals, or manufacturability assessments to stamp new aluminium doors.

In this case, a strong enabler for the adoption of AI is to capture and structure historical data - and new data on an ongoing basis.

This means adjusting your workflow management to systematically record the design characteristics, test conditions, and test results.
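
As a rough illustration of what systematic recording could look like, the sketch below defines one record per test, grouping design characteristics, test conditions, and test results, and stores the records as CSV. The `TrialRecord` class and all its field names are hypothetical (loosely based on the wheel example); a real deployment would more likely use a PLM or data acquisition system than flat files.

```python
import csv
import io
import typing
from dataclasses import asdict, dataclass, fields


@dataclass(frozen=True)
class TrialRecord:
    """One row of R&D history; every field name here is hypothetical."""
    design_id: str
    rim_width_mm: float      # design characteristic
    spoke_count: int         # design characteristic
    load_case: str           # test condition
    applied_load_kn: float   # test condition
    max_stress_mpa: float    # test result
    passed: bool             # test result


FIELD_NAMES = [f.name for f in fields(TrialRecord)]
FIELD_TYPES = typing.get_type_hints(TrialRecord)


def write_records(records, stream):
    """Write records as CSV with a fixed header, so every cycle uses the same columns."""
    writer = csv.DictWriter(stream, fieldnames=FIELD_NAMES)
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))


def read_records(stream):
    """Read CSV rows back into typed records."""
    parsers = {bool: lambda text: text == "True"}  # bool("False") would be True
    records = []
    for row in csv.DictReader(stream):
        values = {
            name: parsers.get(FIELD_TYPES[name], FIELD_TYPES[name])(row[name])
            for name in FIELD_NAMES
        }
        records.append(TrialRecord(**values))
    return records


if __name__ == "__main__":
    buffer = io.StringIO()
    write_records(
        [TrialRecord("W-001", 215.0, 5, "radial_fatigue", 12.5, 182.3, True)],
        buffer,
    )
    print(buffer.getvalue())
```

Fixing the schema up front is the design choice that matters here: each new design cycle appends rows with the same columns and units, which is what lets a model be retrained on the combined history later.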

There are many data acquisition and Product Lifecycle Management tools which enable companies to record R&D efforts easily and consistently, to form centralised databases of historical data.

Machine learning models can learn from this retained knowledge and provide insight to speed up product development processes within workflow management strategies.

As more data is captured, your datasets grow and the AI models can be retrained on a richer body of knowledge. Predictions then become more accurate, and your design cycles accelerate further.

However, you need to ensure that your data is structured in an “AI friendly” way to make the most of the historical or parametric designs (see this article by our Principal Engineer Dr Joël Henry to find out more on this).

This virtuous circle has enabled some of our clients to eliminate the need for prototyping and physical testing entirely. Instead, they provide AI-powered enterprise apps to their customers, who benefit from instant quotations on the compatibility of packaging products with new requirements.

An important point to note is that engineering data often contains complex, nuanced 3D designs, which are difficult to compare quantitatively against one another.

Thankfully, Monolith AI has developed Autoencoders, which are 3D deep learning models capable of automatically parameterising datasets of 3D CAD files.

This enables the 3D CAD files to be represented as a structured set of data from which to build predictive AI models. Visit the Features page of our website to find out more.

Engineering workflow management: revolutionary designs

Engineering, and engineering workflow management, sometimes involves developing totally new design concepts which might not be directly comparable to previous work.

In this case, the enabler for the adoption of AI will be reusable methods that automate running simulations in batches.

Many process automation CAE tools such as Siemens Design Manager enable simulation engineers to evenly sample a design space (varying geometric parameters and boundary conditions) to generate a dataset of a batch of simulation results.
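
One common way to spread samples evenly over a design space is Latin hypercube sampling, sketched below using only the Python standard library. This is an illustration of the general idea, not a description of how any particular CAE tool does it; the `latin_hypercube` function and the parameter names in the usage example are hypothetical.

```python
import random


def latin_hypercube(bounds, n_samples, seed=0):
    """Draw n_samples points so that, for each parameter, every one of the
    n_samples equal-width intervals of its range contains exactly one point.

    bounds maps a parameter name to a (low, high) tuple.
    """
    rng = random.Random(seed)  # seeded so a batch can be regenerated exactly
    columns = []
    for low, high in bounds.values():
        # One random position inside each interval, expressed on [0, 1).
        unit = [(i + rng.random()) / n_samples for i in range(n_samples)]
        # Shuffle so intervals are paired randomly across parameters.
        rng.shuffle(unit)
        columns.append([low + u * (high - low) for u in unit])
    names = list(bounds)
    return [dict(zip(names, row)) for row in zip(*columns)]


if __name__ == "__main__":
    # Hypothetical geometric parameter and boundary condition.
    design_space = {
        "rim_width_mm": (200.0, 240.0),
        "inlet_velocity_m_s": (5.0, 30.0),
    }
    for sample in latin_hypercube(design_space, n_samples=5, seed=42):
        print(sample)  # each dict would configure one simulation in the batch
```

Each returned dictionary would configure one simulation run; the resulting inputs and outputs together form the dataset from which a surrogate model can be built.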

The upfront investment in this case is one of computational resources rather than manual effort. The knowledge which is retained is the ability to parameterise design concepts and use a data generation framework to build surrogate models.

This will reduce the need for simulation iterations, allow you to respond more quickly to changes in requirements, and therefore improve alignment with other teams through better engineering workflow management.