Battery technology is at the heart of numerous innovations, from electric vehicles (EVs) to renewable energy storage. As the demand for more powerful, longer-lasting batteries continues to grow, so do the challenges associated with testing and optimising them.

Calling batteries complex systems is an understatement. Developing a battery system means balancing many intertwined design objectives, including lifespan, cost, and safety, and it is almost impossible to keep all of them in view at once.

Engineers must balance conflicting goals, including regulatory requirements, certification standards, and customer demands. However, most of the physics-based tools available to engineers today fall short of finding optimal solutions within the vast design space.

This blog focuses on how AI's predictive capabilities are reshaping the way engineers test battery systems, a crucial step in the tedious validation process. It builds on a collaboration between Stanford, MIT, and the Toyota Research Institute that set out to find the best method for charging an electric vehicle (EV) battery in just ten minutes while maximising the battery's overall lifespan.

Maximising battery performance and lifespan with AI: insights from Stanford and MIT

One example of the power of machine learning in battery applications is the research conducted by Stanford University. In a paper published in the journal Nature, researchers from Stanford, MIT, and the Toyota Research Institute used a combination of machine learning models to identify the optimal charging protocol for lithium-ion batteries.

New technologies must be tested for months or even years at every stage of the battery development process to determine how long they will last. The team has developed a machine learning-based method that slashes these testing times by 98%.

Although the group tested their method on battery charge speed, they said it can be applied to numerous other parts of the battery development pipeline and even to non-energy technologies.

This research shows what machine learning can do in battery applications. By analysing large amounts of data quickly and accurately, machine learning algorithms can identify patterns and trends that may not be immediately apparent to humans.

This can lead to significant improvements in battery performance, lifespan, and cost, making machine learning an essential tool for engineers working in the battery industry. The approach opens up new opportunities to optimise battery systems, including charging and discharging strategies, battery pack design, and lifetime ageing.

Understanding the basics of data collection for machine learning

One of the key aspects of machine learning is data collection. Engineers primarily gather data through the design of experiments (DoE), which involves planning and conducting tests to collect data on various battery parameters, such as temperature, charging speed, and lifetime.

A typical DoE involves selecting a set of input parameters, or factors, that are believed to affect the output of interest, or response. These factors are then varied systematically, either one at a time or in combination, to observe the resulting changes in the response. The goal of a DoE is to identify the most important factors that affect the response and to determine the settings for these factors that optimise the response, for example by maximising battery lifetime.
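To make the idea of factors and levels concrete, here is a minimal Python sketch of a full factorial test plan, in which every combination of factor levels is tested once. The factor names and level values are hypothetical, chosen only for illustration, and are not taken from the research described above.

```python
from itertools import product

# Hypothetical factors and levels, for illustration only.
factors = {
    "temperature_c": [15, 25, 45],
    "charge_rate_c": [1.0, 2.0, 4.0],
    "depth_of_discharge": [0.8, 1.0],
}


def full_factorial(factors):
    """Return one test condition (a dict) per combination of factor levels."""
    names = list(factors)
    return [dict(zip(names, levels)) for levels in product(*factors.values())]


test_plan = full_factorial(factors)
print(len(test_plan))  # 3 * 3 * 2 = 18 test conditions
print(test_plan[0])
```

Even this small example produces 18 test conditions, and the count multiplies with every factor or level added, which is why exhaustive plans quickly become expensive.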

In practice, a DoE can take many different forms depending on the specific application and the number of factors involved. Some common types of DoE include full factorial designs, fractional factorial designs, and response surface designs. Each of these designs has its own strengths and weaknesses, and the choice of design depends on the specific goals of the experiment and the available resources. In general, though, they often require significant investments of time and money, as well as enough space for test facilities. It is essential to design experiments carefully to ensure that the data collected is accurate and relevant to the problem at hand.
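The cost difference between design types can be sketched in a few lines. The example below assumes four two-level factors coded as -1 (low) and +1 (high), and compares a full factorial design with a half-fraction built by setting the last factor equal to the product of the others. The function names are illustrative.

```python
from itertools import product
from math import prod


def two_level_full(k):
    """All 2**k runs of a two-level design, coded as -1 (low) and +1 (high)."""
    return [list(run) for run in product((-1, 1), repeat=k)]


def half_fraction(k):
    """2**(k-1) runs: the last factor is set to the product of the other k-1."""
    base = two_level_full(k - 1)
    return [run + [prod(run)] for run in base]


full = two_level_full(4)
half = half_fraction(4)
print(len(full), len(half))  # 16 8
```

The half-fraction needs 8 runs instead of 16, at the price of aliasing: the main effect of the fourth factor can no longer be separated from the three-way interaction of the first three.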

This involves selecting appropriate test conditions, measuring the correct parameters, and ensuring that the data is collected in a consistent and reproducible manner.

In addition to designed experiments, engineers can collect data through monitoring, for example by using sensors and other monitoring devices to record real-time data on battery performance. This can provide valuable insights into how batteries behave under different conditions and can help to identify potential issues before they become critical.

By carefully designing experiments and collecting high-quality test data, engineers can train accurate and reliable machine learning models. These models help reduce testing time and cost and improve battery performance and lifespan, so that teams test neither too much nor too little and do not jeopardise the safety of the battery system.

How machine learning can improve battery testing

Machine learning offers a multifaceted approach to enhance battery testing methodologies. To begin with, it streamlines the testing process, significantly reducing both time and costs. This saving stems from its ability to predict battery performance and lifespan from a smaller set of tests.

Machine learning models are trained on extensive repositories of battery performance data, enabling them to accurately forecast how batteries will respond to varying conditions. Consequently, engineers can reduce the number of tests required to validate battery designs, leading to substantial savings in both time and resources.

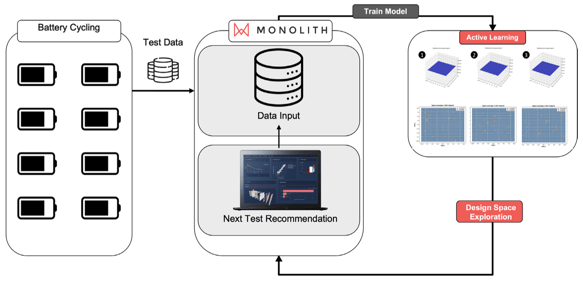

The Next Test Recommender uses robust active learning algorithms to iteratively explore and recommend which tests are most important to run next for your design. Using data-driven self-learning models, test engineers can run fewer tests yet be more confident in test coverage.
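The idea of recommending the next test can be illustrated with a simple form of active learning called uncertainty sampling. The sketch below is not Monolith's NTR algorithm. It is a minimal stand-in that assumes a single factor (charge rate), made-up cycle-life measurements, and a bootstrap ensemble of straight-line fits, and it recommends the candidate test where the ensemble members disagree most.

```python
import random
from statistics import mean, pstdev


def fit_line(xs, ys):
    """Ordinary least-squares fit y = a + b * x; returns (a, b)."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return my - b * mx, b


def bootstrap_ensemble(xs, ys, n_models=50, seed=0):
    """Fit one line per bootstrap resample of the tested points."""
    rng = random.Random(seed)
    models = []
    while len(models) < n_models:
        idx = [rng.randrange(len(xs)) for _ in xs]
        sample_x = [xs[i] for i in idx]
        if len(set(sample_x)) < 2:  # a line needs at least two distinct x values
            continue
        models.append(fit_line(sample_x, [ys[i] for i in idx]))
    return models


def prediction_spread(x, models):
    """Standard deviation of the ensemble predictions at x."""
    return pstdev([a + b * x for a, b in models])


def recommend_next(candidates, models):
    """Pick the candidate where the ensemble predictions disagree most."""
    return max(candidates, key=lambda x: prediction_spread(x, models))


# Made-up data for illustration only: charge rate (C) vs. cycle life.
tested_rates = [1.0, 1.5, 2.0, 2.5]
cycle_life = [1200, 1100, 950, 900]
candidate_rates = [3.0, 4.0, 6.0]

models = bootstrap_ensemble(tested_rates, cycle_life)
next_test = recommend_next(candidate_rates, models)
print(next_test)
```

In a real loop, the recommended test would be run, its result added to the tested data, and the ensemble refitted before the next recommendation is made.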

Moreover, machine learning excels at uncovering intricate patterns and correlations that may elude human observation. These insights empower designers and engineers to operate more efficiently, directing their attention to the most pivotal parameters for testing. By pinpointing the critical factors, machine learning assists in fine-tuning battery designs and optimizing the battery’s lifetime.

Lastly, machine learning applications extend to optimizing battery charge and discharge protocols by predicting the outcomes of extensive testing and guiding the selection of appropriate testing procedures. This predictive approach ensures that engineers can fine-tune battery designs for optimal longevity.

Machine learning also supports battery safety. Advanced sampling strategies aim to reduce model uncertainty, focus testing on unexplored regions of the design space, and identify potential failure modes and safety risks early. This helps ensure the reliability of battery systems while their design and operation are being optimised.
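One simple way to combine these goals is to score each candidate test on both model uncertainty and distance from existing tests. The following sketch is an assumption made for illustration, not the method used in Monolith: the weighting scheme, the function names, and the uncertainty values are all hypothetical, and factor values are assumed to be pre-scaled to the range 0 to 1.

```python
from math import dist


def exploration_score(candidate, tested):
    """Distance from a candidate to its nearest already-tested point."""
    return min(dist(candidate, t) for t in tested)


def normalise(values):
    """Scale scores to [0, 1] so that the two criteria are comparable."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def rank_candidates(candidates, tested, uncertainty, weight=0.5):
    """Rank by weight * uncertainty + (1 - weight) * exploration, best first."""
    u = normalise([uncertainty[c] for c in candidates])
    e = normalise([exploration_score(c, tested) for c in candidates])
    scored = [(weight * ui + (1 - weight) * ei, c)
              for ui, ei, c in zip(u, e, candidates)]
    return [c for _, c in sorted(scored, reverse=True)]


# Hypothetical, pre-scaled (temperature, charge rate) points.
tested = [(0.1, 0.1), (0.2, 0.3)]
candidates = [(0.15, 0.2), (0.9, 0.9), (0.5, 0.5)]
# Hypothetical model uncertainty for each candidate.
uncertainty = {(0.15, 0.2): 0.9, (0.9, 0.9): 0.6, (0.5, 0.5): 0.2}

balanced = rank_candidates(candidates, tested, uncertainty)
uncertainty_only = rank_candidates(candidates, tested, uncertainty, weight=1.0)
print(balanced[0], uncertainty_only[0])
```

With equal weights, the far-away candidate ranks first even though another candidate has higher uncertainty. With `weight=1.0`, the ranking follows uncertainty alone and stays close to the existing tests.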

Using the Next Test Recommender (NTR) algorithms in Monolith, the team was able to show reductions in the number of tests required for identifying battery lifetime and finding the optimal charging cycle of 59% and 73% respectively. Applying active learning techniques to the battery testing challenge shows great potential to reduce the overall time and money spent on tests and to accelerate time to market.

Monolith: machine learning for battery testing

Monolith recently unveiled its groundbreaking product update, the Next Test Recommender (NTR). This innovative feature provides active recommendations for R&D tests during the development of challenging products such as batteries and fuel cells.

Unlike random sampling (2, in the middle) and a single active learning (AL) method (3, on the right), the robust combination of AL methods (1, on the left) ensures safe coverage of the design space and a focus on critical regions.

The integration of robust active learning algorithms into our Next Test Recommender (NTR) marks a momentous stride in the realm of product development, particularly within safety-critical domains like battery systems.

By harnessing the power of Robust Active Learning, Monolith empowers engineers to make strategic decisions regarding test prioritization, focusing on those tests with the greatest potential to influence battery performance positively.

This approach not only curtails testing durations but also delivers significant cost savings, revolutionizing the way we approach safety-critical product development.

In one demanding powertrain development task, an engineer needed to configure an optimal cooling fan, which entailed an exhaustive test plan of 129 tests. With Monolith's Next Test Recommender (NTR), the results were striking.

NTR, driven by active learning, prioritised the test sequence, suggesting that test number 129 should be among the first five to run. It also determined that just 60 tests were enough to characterise the fan's performance comprehensively. This translated into a 53% reduction in testing effort, as described in the news release.
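The reduction figure follows directly from the two test counts quoted above. As a quick check (the function name is illustrative):

```python
def reduction_fraction(planned, executed):
    """Fraction of the planned tests that did not need to be run."""
    return (planned - executed) / planned


reduction = reduction_fraction(planned=129, executed=60)
print(f"{reduction:.1%}")  # 53.5%
```

Running 60 of 129 planned tests skips 69 of them, or about 53% of the original plan.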

Best practices for implementing machine learning in battery testing

When it comes to implementing machine learning in battery testing, engineers should keep several best practices in mind. The initial step is gaining a comprehensive grasp of the problem you aim to address and the data at your disposal. The extent of data availability significantly influences the path you should tread in your machine learning journey.

First, beginning from scratch, with no prior data, entails a different set of challenges and considerations. In such cases, you might need to focus on data collection strategies, setting up data pipelines, and ensuring data quality. Starting with a clean slate allows you to tailor your approach without being constrained by existing data patterns.

Second, when you possess a dataset, even if it's relatively small, you can leverage existing information. This means you can explore techniques such as supervised learning, where the algorithm learns from labelled data to make predictions. Larger datasets open doors to more complex models and deep learning architectures, which thrive on vast volumes of data to uncover intricate patterns. It's important to have a robust data management system in place to ensure that your data is clean, organised, and easily accessible. This will help you to train accurate machine learning models and make informed decisions based on your data.

Third, the nature of your data matters. Is it structured, unstructured, or semi-structured? Understanding this informs your choice among the available machine learning algorithms and affects how effective your method will be.

Fourth, it's important to have a team of experienced engineers who can help design and adopt AI in your organisation. Machine learning is a complex field that requires specialised knowledge, so your team should include engineering subject matter experts who understand the basics of AI and how it can be used to understand complex physical systems.

Fifth, it's important to continuously monitor and evaluate your machine learning models to ensure that they remain accurate. Models can become out of date as new data becomes available, so regularly retrain them and update your algorithms as needed. At Monolith, we commonly refer to models maintained this way as self-learning models.

Finally, it's important to have a clear plan for how you will integrate your machine learning solution into your existing testing processes. This may involve developing new testing protocols, training staff on new tools and techniques, and integrating machine learning into your existing data management systems.
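The monitoring practice described above can be sketched as a simple retraining trigger. This is a minimal illustration under stated assumptions, not a description of how Monolith implements self-learning models: the function names, the tolerance factor of 1.5, and all numbers are hypothetical.

```python
from statistics import mean


def mean_absolute_error(predicted, measured):
    """Average absolute gap between model predictions and test results."""
    return mean(abs(p - m) for p, m in zip(predicted, measured))


def needs_retraining(predicted, measured, baseline_mae, tolerance=1.5):
    """Flag the model when error on new tests exceeds tolerance * baseline error."""
    return mean_absolute_error(predicted, measured) > tolerance * baseline_mae


# Hypothetical cycle-life predictions and two batches of new test results.
baseline_mae = 20.0  # error measured when the model was last validated
predicted = [1000, 950, 900]
batch_ok = [990, 940, 960]       # errors 10, 10, 60: mean below the threshold of 30
batch_drifted = [940, 900, 960]  # errors 60, 50, 60: mean above the threshold of 30

print(needs_retraining(predicted, batch_ok, baseline_mae))
print(needs_retraining(predicted, batch_drifted, baseline_mae))
```

A check like this can run each time a new batch of test results arrives, so that retraining happens when the data calls for it rather than on a fixed schedule.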

By following these best practices, you and your team of engineers can successfully implement machine learning in battery testing and achieve significant improvements in testing time, cost, and performance.

Conclusion

NTR is reshaping the landscape of battery testing, offering unprecedented insights and efficiency. Monolith, with its active learning technology applied in real-world success stories, exemplifies the potential of this revolutionary approach.

It’s time for engineers and researchers to embrace machine learning as a vital tool in their pursuit of safer, longer-lasting, and more efficient batteries. As battery technology continues to evolve, the synergy between human expertise and machine intelligence will drive us toward a future where energy storage solutions are both powerful and sustainable.