Author: Aadam Khan, Engineering Outreach Rep, Monolith

Read Time: 10 mins

The Most Successful Projects Of 2025

In 2025, we worked on close to a hundred AI projects and proposals across automotive, aerospace, motorsport, and battery development. This included work with global OEMs such as Nissan, advanced aerospace programmes at Vertical Aerospace, and high-performance racing teams including JOTA Sport and PREMA Racing.

Across trade shows, technical workshops, and live programmes, engineers asked us where AI was delivering real results in engineering. Which applications were being trusted? Where was test effort genuinely being reduced? And which approaches were scaling beyond pilots?

The use cases highlighted here are the areas where we saw the strongest and most consistent demand throughout 2025. These are the applications engineering teams repeatedly explored, adopted, and scaled because they delivered measurable value and showed a credible path to enterprise-level adoption.

How We Identify AI Use Cases That Actually Work in Engineering

The use cases featured here were selected because they repeatedly appeared in live engineering programmes and delivered outcomes that teams were willing to act on.

They stood out for three reasons:

- Proven technical readiness

These applications worked with today’s data, infrastructure, and test environments. They ran inside live programmes without requiring major data refactoring, additional workflow disruption, or research-level intervention.

- Clear, demonstrated impact

Each use case delivered measurable engineering outcomes, such as reduced physical testing, shorter validation cycles, improved confidence in data, or more effective use of constrained test capacity.

- Repeated demand from the field

These topics surfaced again and again in customer projects, technical workshops, and conversations at trade shows. Teams were not debating whether these problems mattered, but how others were solving them and what was working in practice.

Taken together, this is why these use cases are worth paying attention to. They reflect where AI is already being trusted by experienced engineering teams, and where adoption is moving from isolated pilots into standard operating practice.

Use Case 1: Accelerating ECU Calibration with AI

Using AI to reduce physical test effort in combustion engine ECU (Engine Control Unit) calibration was one of the most consistently successful applications we saw in 2025. As powertrain systems become more complex, calibration teams are dealing with larger parameter spaces, tighter constraints, and pressure to make decisions earlier in the V-cycle, all with limited dyno and vehicle test capacity.

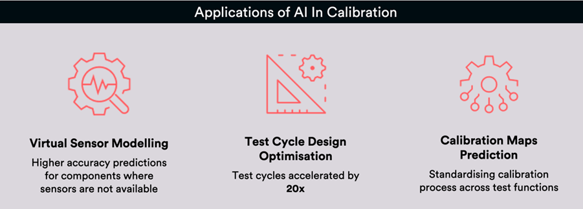

Rather than replacing established calibration workflows, AI was applied directly to real dyno and vehicle data to reduce unnecessary physical testing. Across multiple programmes, three applications proved effective: virtual sensor models for hard-to-measure variables, AI-guided test cycle design to prioritise informative operating conditions, and data-driven generation of first-pass calibration maps.

Figure 1: AI applications within ECU calibration that shift learning earlier in the V-cycle and shorten validation timelines under constrained test capacity.

Models trained on test data enabled engineers to estimate variables such as exhaust temperatures accurately, reducing reliance on additional instrumentation. Built-in explainability allowed predictions to be checked against physical expectations instead of being treated as black-box outputs. In parallel, AI-guided test planning shortened validation cycles by focusing testing on conditions that delivered the most information.

Teams reduced physical test effort, shortened validation timelines without sacrificing engineering confidence, and improved the quality of early calibration outputs. Importantly, these methods integrated into existing calibration workflows rather than sitting alongside them as separate analytics tools.

For calibration teams constrained by test capacity and programme timelines, this proved to be a deployable and repeatable use case.

These applications are explored in more detail in our AI for System Calibration white paper.

Read the full Calibration White paper here.

Use Case 2: AI-Driven Test Plan Optimisation That You Can Trust

Test plan optimisation is not a new concept. Engineering teams have been discussing it for years, and many have tried DoE, rule-based prioritisation, or optimisation tools with limited success.

In practice, adoption often stalled because recommendations were difficult to trust, hard to validate against physical behaviour, or failed to translate into actual reductions in physical testing.

What shifted in 2025 was not the theory, but the willingness of teams to act on the output. Across multiple projects, we saw AI-driven test prioritisation move from analysis support to an input used to make real test reduction decisions.

This was evident in our work with Nissan Technical Centre Europe. Each vehicle programme involved hundreds of bolted joint tests across torque ranges, load profiles, and edge cases, all competing for limited rig availability and tight delivery timelines.

Historically, broad coverage was the safest option, even when it absorbed significant time and capacity. As system complexity increased, that approach became increasingly difficult to sustain.

Rather than executing full test matrices, machine learning models were trained on nearly 90 years of historic test data to identify conditions most likely to expose uncertainty or non-linear behaviour.

Importantly, model outputs were not treated as directives. Engineers reviewed predictions against known physical behaviour and used confidence estimates to determine where physical testing was still necessary.

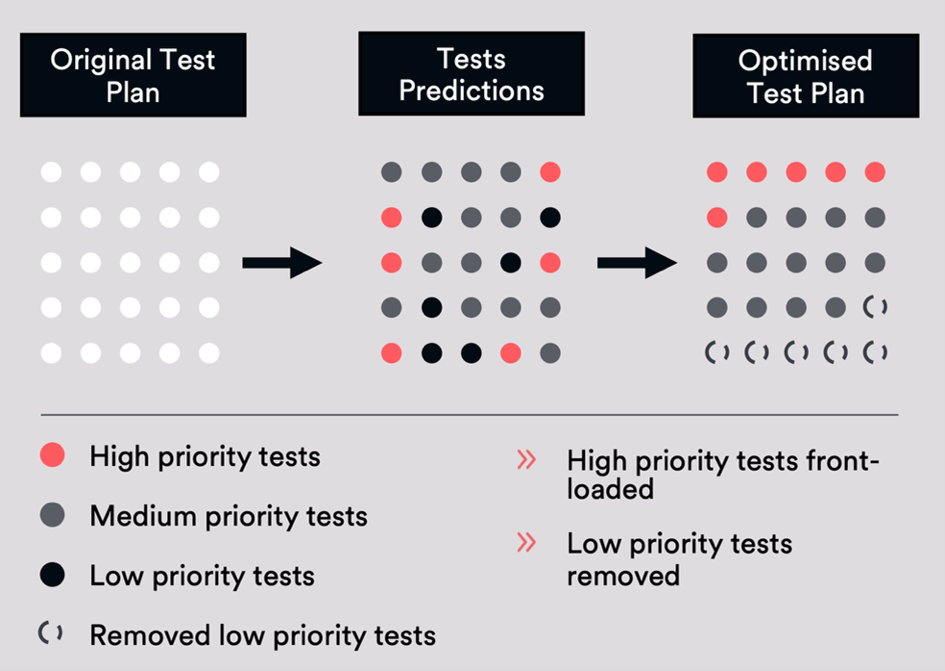

Figure 2: AI-guided restructuring of a test plan based on predicted information value.

Test plans were rebuilt around expected information gain rather than blanket coverage. High-risk cases were front-loaded, while low-impact tests were safely deprioritised or removed.

This delivered a 17% reduction in physical testing while maintaining required safety margins, freeing up rigs, technician time, and engineering capacity without compromising validation integrity.

As development timelines tighten and test capacity remains fixed, this marks a meaningful shift from theoretical test plan optimisation to operational use.

We explore this application in more detail in the Nissan Test Plan Optimisation case study, including how predictions were validated, how risk was managed, and how test reduction was accepted in practice.

Beyond this programme, we observed the same approach applied in race car setup and battery cell testing, with recommendations used to guide effort rather than dictate outcomes. Both are covered in more detail later in this post.

Use Case 3: Reducing Motorsport Testing Without Compromising Decision Quality

Motorsport engineering is an unforgiving environment for data-driven methods. Wind tunnel time is limited, track testing windows are brief, and setup configurations must be locked in ahead of defined change deadlines.

Engineers are required to extract reliable insight quickly, often before the next session or race weekend. In this environment, unreliable data or overconfident models carry immediate performance risk, making motorsport a practical proving ground for approaches that claim to reduce testing without sacrificing decision quality.

In 2025, we saw AI applied successfully in two specific areas: reducing physical test effort during setup optimisation, and improving confidence in telemetry used for race-critical decisions.

Reducing Setup Testing at JOTA Sport

At JOTA Sport, aerodynamic and vehicle setup decisions are traditionally driven by extensive wind tunnel and track testing. These campaigns are costly, time-intensive, and often repetitive, yet engineers still require high-resolution performance maps to evaluate trade-offs between configuration parameters.

To address this, interpretable machine learning models were trained directly on their data to capture the relationship between adjustable parameters, such as ride height and aerodynamic elements, and outputs including front and rear downforce and drag. The emphasis was on models that remained stable within the operating envelope and could be interrogated by engineers.

By validating model behaviour against known physical trends and rejecting regions where extrapolation became unreliable, engineers were able to explore configuration changes virtually and prioritise only the most informative physical tests. In practice, this reduced their test effort by up to 80% while maintaining comparable accuracy in performance maps used for decision-making.

Figure 3: Monolith platform notebook showing data-driven performance models used to evaluate setup configurations in a motorsport environment.
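Rejecting regions where extrapolation becomes unreliable can start with something as simple as an envelope check. The sketch below records the range of each setup parameter covered by tested configurations and flags any virtual query that steps outside it. The parameter names and values are hypothetical, and a per-parameter range check is a deliberate simplification: a point inside the box can still be far from any tested setup.

```python
# Illustrative (made-up) tested setups: front ride height (mm), rear wing angle (deg).
tested_setups = [
    {"front_ride_height_mm": 38.0, "rear_wing_deg": 6.0},
    {"front_ride_height_mm": 42.0, "rear_wing_deg": 9.0},
    {"front_ride_height_mm": 45.0, "rear_wing_deg": 12.0},
    {"front_ride_height_mm": 50.0, "rear_wing_deg": 8.0},
]


def operating_envelope(setups):
    """Per-parameter (min, max) range covered by the tested setups."""
    names = setups[0].keys()
    return {n: (min(s[n] for s in setups), max(s[n] for s in setups)) for n in names}


def out_of_envelope(query, envelope):
    """Return the parameters of `query` that fall outside the tested range."""
    return [n for n, (lo, hi) in envelope.items() if not lo <= query[n] <= hi]


envelope = operating_envelope(tested_setups)

inside = {"front_ride_height_mm": 44.0, "rear_wing_deg": 10.0}
outside = {"front_ride_height_mm": 44.0, "rear_wing_deg": 15.0}

for name, query in [("inside", inside), ("outside", outside)]:
    violations = out_of_envelope(query, envelope)
    if violations:
        print(f"{name}: needs physical test, outside tested range for {violations}")
    else:
        print(f"{name}: within tested range, model prediction can be reviewed")
```

Queries flagged by the check are the ones to send to the wind tunnel or track rather than answer from the model.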

Improving Telemetry Trust at PREMA Racing

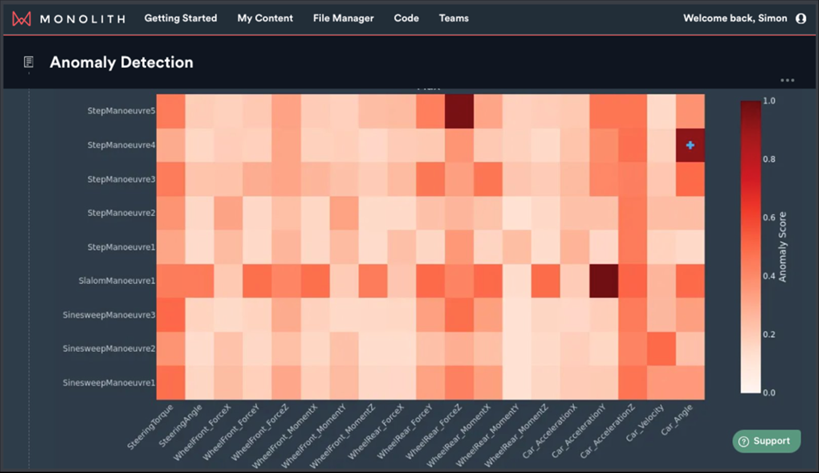

At PREMA Racing, the constraint was different. Large volumes of high-frequency telemetry are collected during testing and race weekends, but data quality is often the limiting factor.

Sensor faults, signal dropouts, or subtle anomalies can propagate through analysis pipelines and distort conclusions.

Here, anomaly detection was applied across multiple telemetry channels to learn normal operating behaviour and automatically flag deviations as they occurred. This allowed engineers to identify suspect signals early, isolate data quality issues, and prevent unreliable data from influencing downstream analysis during time-critical sessions.

Figure 4: Anomaly detection interface highlighting abnormal patterns across multiple telemetry channels during different on-track manoeuvres, supporting rapid data validation in a motorsport environment.
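As a rough illustration of flagging deviations across several channels, the sketch below scores each sample by its distance from the channel median, scaled by the median absolute deviation (MAD). This is a simple statistical stand-in, not the learned models described above, and it assumes roughly steady signals; real telemetry changes with each manoeuvre and would need windowing or a behavioural model. Channel names, values, and the threshold are hypothetical.

```python
from statistics import median


def robust_z_scores(values):
    """Score each sample by its distance from the median, scaled by the MAD."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return [0.0 for _ in values]  # flat signal: nothing to score against
    # 1.4826 scales the MAD to match the standard deviation of a normal distribution.
    return [(v - med) / (1.4826 * mad) for v in values]


def flag_anomalies(channels, threshold=5.0):
    """Return {channel name: [sample indices whose |robust z| exceeds threshold]}."""
    flagged = {}
    for name, values in channels.items():
        scores = robust_z_scores(values)
        hits = [i for i, z in enumerate(scores) if abs(z) > threshold]
        if hits:
            flagged[name] = hits
    return flagged


# Illustrative (made-up) telemetry: a dropout on one channel, none on the other.
telemetry = {
    "brake_pressure_bar": [41.8, 42.1, 42.0, 41.9, 0.0, 42.2, 42.0, 41.7, 42.1, 41.9],
    "oil_temp_c": [101.2, 101.4, 101.3, 101.5, 101.4, 101.6, 101.5, 101.7, 101.6, 101.8],
}

print(flag_anomalies(telemetry))
```

Using the median and MAD rather than the mean and standard deviation keeps a single dropout from inflating the baseline it is being compared against.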

This addressed a foundational bottleneck in motorsport engineering: the ability to trust the data driving decisions when there is little margin for error.

Together, these projects show how AI can support different aspects of motorsport engineering, from reducing reliance on repetitive testing to strengthening confidence in the data underpinning time-critical decisions.

The constraints of motorsport make it a demanding environment, but also a useful reference point for applying similar approaches in other high-pressure engineering domains.

Use Case 4: Using AI to Shorten Battery Chemistry Iteration Cycles Under Fixed Lab Capacity

Battery chemistry development has always been constrained by long test cycles, limited lab throughput, and a design space where improvements in one metric often come at the expense of another. As a result, progress is typically driven by cautious iteration rather than rapid exploration.

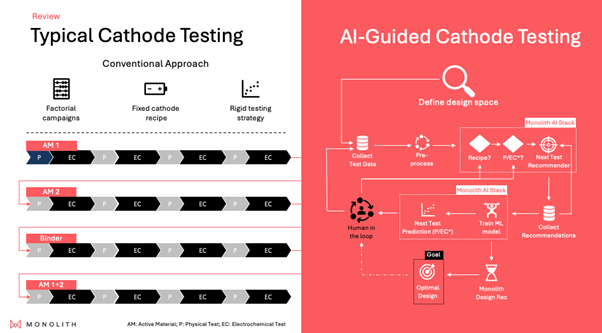

In 2025, we saw AI applied successfully to shorten this iteration loop without weakening experimental rigour. In a project with an OEM battery development team focused on LFP cathode optimisation, machine learning models were trained on formulation and processing variables alongside measured performance outcomes.

The objective was not to predict final chemistry choices, but to reduce the number of experimental cycles required to reach confident decisions.

Engineers used the models to explore candidate formulations virtually, compare trade-offs across competing objectives, and eliminate low-value regions of the design space before committing lab resources.

This allowed physical testing to focus on combinations most likely to resolve uncertainty or clarify performance trade-offs.

Figure 5: Comparison between conventional cathode testing and an AI-guided workflow, showing how data-driven modelling and next-test recommendation accelerate exploration of the cathode design space.
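Comparing trade-offs across competing objectives and eliminating low-value regions can be sketched as a Pareto filter: a candidate is dropped only if another candidate is at least as good on every objective and strictly better on one. The formulation labels, objective names, and predicted values below are made up for illustration, and the sketch ignores prediction uncertainty, which a real workflow would also weigh before discarding a candidate.

```python
# Illustrative (made-up) predictions for candidate cathode formulations.
# Both objectives are "higher is better": specific capacity and capacity retention.
candidates = {
    "A": {"capacity_mah_g": 152.0, "retention_pct": 91.0},
    "B": {"capacity_mah_g": 158.0, "retention_pct": 88.0},
    "C": {"capacity_mah_g": 149.0, "retention_pct": 90.0},
    "D": {"capacity_mah_g": 161.0, "retention_pct": 84.0},
    "E": {"capacity_mah_g": 155.0, "retention_pct": 87.0},
}


def dominates(p, q):
    """True if p is at least as good as q on every objective and better on one."""
    return all(p[k] >= q[k] for k in p) and any(p[k] > q[k] for k in p)


def pareto_front(cands):
    """Names of candidates that no other candidate dominates."""
    return sorted(
        name
        for name, p in cands.items()
        if not any(dominates(q, p) for other, q in cands.items() if other != name)
    )


front = pareto_front(candidates)
dropped = sorted(set(candidates) - set(front))
print("keep for lab testing:", front)
print("deprioritise:", dropped)
```

The candidates left on the front represent genuine trade-offs, which is where lab time is most likely to clarify a decision.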

The workflow extended beyond modelling. Model-guided experiment selection was used to recommend the next most informative tests, prioritising experiments expected to reduce uncertainty rather than repeating fixed test matrices. This shifted lab effort from broad coverage to targeted learning.

In practice, the team used the approach to design a revised test plan that met the required performance criteria while exploring the cathode design space with half as many physical tests as a comparable DoE-driven campaign.

For battery teams facing pressure on cost, timelines, and capacity, this is where AI has proven most effective: accelerating learning across complex chemistry trade-offs while keeping experimental control firmly with engineers.

Use Case 5: Anomaly Detection in Battery Pack Testing - Where It Works and Where It Doesn’t

As engineering programmes have grown more complex, the volume and rate of data capture have increased significantly. Engineers increasingly rely on automated methods to validate test data and surface issues early, rather than attempting exhaustive manual review.

This is particularly true in battery testing, where large volumes of high-frequency measurements across voltage, temperature, current, and control signals are captured continuously.

These experiments are costly and time-consuming, and when issues such as sensor faults, wiring problems, or test setup errors are discovered late in a programme, the impact can be substantial. Entire test campaigns may need to be reworked, delaying critical decisions.

For these reasons, battery monitoring is a natural candidate for anomaly detection, provided it is applied with realistic expectations.

In 2025, anomaly detection was evaluated across many battery programmes, with mixed results. It delivered clear value only in environments where the engineering foundations were already in place:

- Well-defined and repeatable test routines

- Consistent, well-instrumented pack-level measurements across voltage, temperature, current, and control signals

- Sufficient high-quality historical data to establish meaningful baselines

In these cases, models learned normal operating behaviour across multiple signals and flagged deviations early, allowing engineers to identify test errors, sensor drift, or abnormal pack behaviour before those issues influenced validation decisions or downstream analysis.

Where the approach fell short was equally instructive. Programmes struggled to move beyond pilots when:

- Testing was conducted at small scale or with frequently changing conditions

- Metadata was incomplete or inconsistently recorded

- Data pipelines were fragile or poorly maintained

The takeaway from 2025 was pragmatic. Anomaly detection is not a general solution for battery pack testing. It is a targeted capability that works when data quality, test discipline, and documentation are already in place. As battery testing infrastructure continues to mature, interest remains high.

To see practical examples of where anomaly detection adds value in battery testing, including common test errors it can surface and the limitations to be aware of, watch the webinar here:

Webinar: 4 Hidden Battery Testing Errors You Can Detect with AI.

Key Focus Areas of AI in Engineering in 2026

The pattern from 2025 is clear. Most engineering teams are no longer evaluating whether AI can help. The focus has shifted to whether it can be deployed reliably inside real test and validation operations.

In 2026, the work moves from models to systems. That means taking approaches that already work and turning them into repeatable, governed workflows: automated data pipelines, model retraining and monitoring, clear confidence thresholds, and traceability that allows outputs to be reviewed, defended, and signed off by accountable engineers.

Demand is concentrating around the same bottlenecks we saw throughout 2025: calibration workloads under fixed test capacity, test plan reduction that teams can justify internally, and battery and telemetry-heavy programmes where data quality directly affects downstream decisions. These areas matter not because they are new, but because small improvements compound quickly when applied at scale.

What has changed is not the ambition, but the maturity. Teams are increasingly willing to act on AI outputs when those outputs fit existing workflows, respect engineering judgement, and come with the governance needed to operate in regulated or safety-critical environments.

Where scaling still fails is also clear. Inconsistent test procedures, missing metadata, fragile data pipelines, and unclear ownership continue to block adoption. Successful programmes treat AI deployment as an engineering change, not a tooling exercise. Data, process, and decision ownership are aligned before models are rolled out.

If you are moving from pilots to production, the question is no longer whether AI can help, but where it will create measurable capacity, risk reduction, or decision confidence in your organisation.

If that is a conversation you are ready to have, we would be happy to help.

Talk to one of our experts or book a discovery call to explore where AI can deliver the most value for your team.

2026 Strategic Imperatives

What changed in 2025 was not ambition, but maturity. Many AI use cases that once sounded compelling are now showing lower-than-expected ROI when applied inside real test and validation environments.

Activity in 2026 appears to fall into two main areas:

- Teams narrowing applications to what is justifiable against real constraints: data quality that already exists, test effort that is genuinely reduced, and outputs that engineers are willing to sign off. These are the use cases that survived pilots and earned trust in production programmes.

- Teams improving data quality and standards so that they can revisit other AI applications once their data is good enough to support them.

The most efficient way to see what works for your use case is a use case matrix workshop, which evaluates data readiness, test costs, risk exposure, and expected return before any deployment decision is made.

About the author

I’m a Chemical Engineering student from Imperial College London, working at Monolith to help engineers use AI to test less and learn more. I’m passionate about using self-learning models to optimise validation and drive innovation in systems like batteries, ECUs, and fuel cells.
